Supervise all the things!

One of the reasons why Elixir is gaining more and more traction is the way it handles errors. Being able to naturally recover from failures encourages coding for the happy path first and letting the code fail if things go wrong. You have probably heard about the famous nine nines of Erlang. Achieving that level of uptime wouldn't be possible if it weren't for the ability of the code to self-heal and recover from failures.

Building highly available applications is one of the areas in which Elixir truly shines, and one of the reasons for that is supervisors. Supervisors monitor your Elixir processes and restart them in a known initial state if they crash. They are what makes the "let it crash" philosophy possible.

There are a number of ways a supervisor can restart its children and they are known as strategies. In this article we are going to have a closer look at different supervision strategies available in Elixir and the differences between them.

It all starts with a broken process

To make the rest of the article more concrete, let's build a process which we'll use to demonstrate how supervisors work. The process is not going to be very useful: it waits a random number of seconds and then crashes.

# boom.ex
defmodule Boom do
  use GenServer

  def start_link(number) do
    GenServer.start_link(__MODULE__, number)
  end

  @impl true
  def init(number) do
    IO.puts("Starting process #{inspect(self())}, number #{number}")
    # Schedule a :boom message to ourselves 1 to 10 seconds from now
    Process.send_after(self(), :boom, Enum.random(1000..10000))
    {:ok, number}
  end

  @impl true
  def handle_info(:boom, number) do
    IO.puts("#####")
    IO.puts("Terminating process #{inspect(self())}, number #{number}")
    # Send ourselves an exit signal; since we don't trap exits, we die with reason :boom
    Process.exit(self(), :boom)
  end
end

Whenever the process starts, it sends itself a message which will cause it to terminate. The message is delayed by a random length of time between 1 and 10 seconds. Thanks to this we will be able to see different processes terminating at random times independently of each other. The only piece of state that the process holds is a number, which we'll use along with its PID to tell different processes apart when logging messages to the console.

We'll spin up a few instances of this process and supervise them using different supervision strategies. As the instances crash, we'll see processes being killed and new ones started. We are going to try out all three strategies that the Supervisor module provides: one_for_one, one_for_all and rest_for_one.

We'll also have a brief look at DynamicSupervisor.

One for one

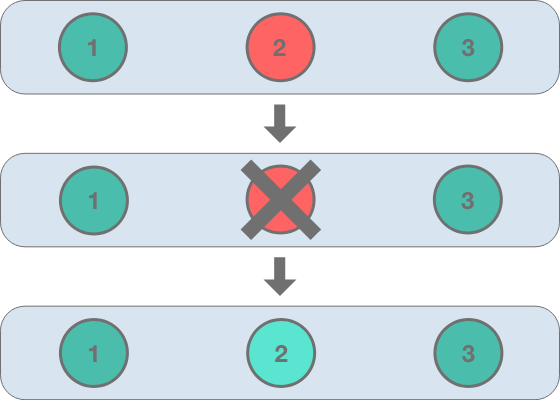

This strategy is perhaps what we would naturally expect from a supervisor. Whenever a processes crashes a supervisor using one_for_one strategy spins up a new process to replace it. Other processes that the supervisor looks after remain unaffected.

This is summarised in the image below. It shows three processes linked to a supervisor. When process number 2 crashes it is replaced by a new instance:

Let's see this mechanism in action. Open up iex and compile the file with the Boom module:

$ iex
Interactive Elixir (1.6.5) - press Ctrl+C to exit (type h() ENTER for help)
iex(1)> c "boom.ex"
[Boom]

Now that we have our module loaded into memory, let's go ahead and start three new processes under a supervisor using the one_for_one strategy:

Supervisor.start_link(
  [
    Supervisor.child_spec({Boom, 1}, id: :boom_1),
    Supervisor.child_spec({Boom, 2}, id: :boom_2),
    Supervisor.child_spec({Boom, 3}, id: :boom_3)
  ],
  strategy: :one_for_one,
  max_restarts: 10
)

Notice how we are setting max_restarts to 10. This keeps the supervisor itself from crashing: start_link links the supervisor to the calling process, so a crashing supervisor would bring the iex shell process down with it. By default max_restarts is 3, which means the supervisor gives up and exits if it has to restart its children more than 3 times within a 5-second window (the default value of max_seconds). Because each of our three processes crashes once every 1 to 10 seconds, we would likely exceed 3 restarts in 5 seconds, so we raise the limit to 10.

You should now see something similar to this in your console:

Starting process #PID<0.99.0>, number 1
Starting process #PID<0.100.0>, number 2
Starting process #PID<0.101.0>, number 3
#####
Terminating process #PID<0.100.0>, number 2
Starting process #PID<0.103.0>, number 2
#####
Terminating process #PID<0.99.0>, number 1
Starting process #PID<0.104.0>, number 1
#####
Terminating process #PID<0.101.0>, number 3
Starting process #PID<0.105.0>, number 3
#####
Terminating process #PID<0.103.0>, number 2
Starting process #PID<0.106.0>, number 2

We can see our three processes started at first. Then, as they randomly fail they get immediately replaced by new processes with the same number but a different PID. This is exactly what we expected, great!
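Because the crashes are random, the output differs on every run. For a repeatable check, here is a small sketch that kills a child on purpose with Process.exit(pid, :kill) and compares the PIDs reported by Supervisor.which_children/1 before and after. It assumes plain Agent processes as stand-ins for Boom (they just hold a number), and it deliberately sets max_restarts: 1 so that a second crash within max_seconds makes the supervisor give up. The calling process traps exits so it survives the supervisor shutting down.

```elixir
# Survive the supervisor exiting at the end of this sketch.
Process.flag(:trap_exit, true)

# Agents stand in for Boom: three idle children with ids 1, 2 and 3.
children =
  for n <- 1..3 do
    Supervisor.child_spec({Agent, fn -> n end}, id: n)
  end

# max_restarts: 1 is an assumption chosen only to make the limit easy to hit.
{:ok, sup} =
  Supervisor.start_link(children, strategy: :one_for_one, max_restarts: 1, max_seconds: 5)

# Current children as a map of id => pid.
pids = fn ->
  Map.new(Supervisor.which_children(sup), fn {id, pid, _type, _modules} -> {id, pid} end)
end

# Restarts happen asynchronously, so poll until child `id` has a new pid.
wait_for_new_pid = fn id, old_pid ->
  Enum.find_value(1..100, fn _ ->
    current = pids.()

    if is_pid(current[id]) and current[id] != old_pid do
      current
    else
      Process.sleep(10)
      nil
    end
  end)
end

before = pids.()
Process.exit(before[2], :kill)
after_first_crash = wait_for_new_pid.(2, before[2])

# Ids whose pid changed: with one_for_one only the killed child.
restarted = for id <- 1..3, after_first_crash[id] != before[id], do: id
IO.inspect(restarted, label: "restarted")

# A second crash within max_seconds exceeds max_restarts: 1.
Process.exit(after_first_crash[2], :kill)

supervisor_exit =
  receive do
    {:EXIT, ^sup, reason} -> reason
  after
    1_000 -> :still_running
  end

IO.inspect(supervisor_exit, label: "supervisor exited with")
```

Only child 2 gets a new PID. The second kill pushes the supervisor past its restart limit, so it terminates its remaining children and exits with reason :shutdown, which is exactly what max_restarts: 10 protects our iex session from.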

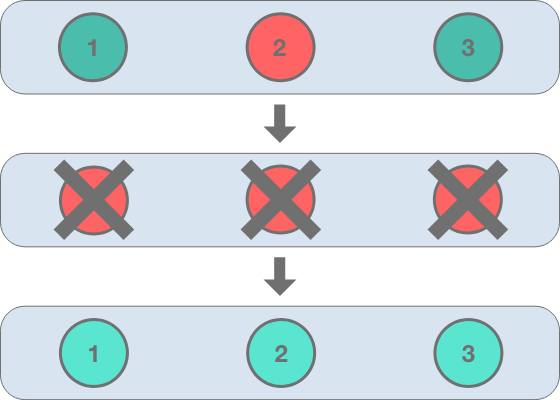

One for all

one_for_all strategy is similar to one_for_one but when one process dies all processes are killed and restarted. Using our example from above, when process number 2 crashes, processes 1 and 3 are killed as well:

We can reproduce it in the console. The only difference between the snippet of code below and the one above is the strategy option passed to start_link:

Supervisor.start_link(
  [
    Supervisor.child_spec({Boom, 1}, id: :boom_1),
    Supervisor.child_spec({Boom, 2}, id: :boom_2),
    Supervisor.child_spec({Boom, 3}, id: :boom_3)
  ],
  strategy: :one_for_all,
  max_restarts: 10
)

And here is the output:

Starting process #PID<0.100.0>, number 1
Starting process #PID<0.101.0>, number 2
Starting process #PID<0.102.0>, number 3
#####
Terminating process #PID<0.101.0>, number 2
Starting process #PID<0.104.0>, number 1
Starting process #PID<0.105.0>, number 2
Starting process #PID<0.106.0>, number 3
#####
Terminating process #PID<0.104.0>, number 1
Starting process #PID<0.107.0>, number 1
Starting process #PID<0.108.0>, number 2
Starting process #PID<0.109.0>, number 3

We can now see that whenever one process crashes, all of them get restarted with new PIDs, just as we expected.
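The same behaviour can be checked deterministically by monitoring every child and then killing one of them. This sketch again assumes idle Agent processes instead of Boom. A monitored process that dies sends a :DOWN message carrying its exit reason, so we can see exactly which children were taken down and why.

```elixir
# Agents stand in for Boom: three idle children with ids 1, 2 and 3.
children =
  for n <- 1..3 do
    Supervisor.child_spec({Agent, fn -> n end}, id: n)
  end

{:ok, sup} = Supervisor.start_link(children, strategy: :one_for_all)

# Monitor every child, remembering which id each monitor reference belongs to.
current = Supervisor.which_children(sup)

refs =
  for {id, pid, _type, _modules} <- current, into: %{} do
    {Process.monitor(pid), id}
  end

# Kill child 2 directly instead of waiting for a random crash.
{2, pid2, _type, _modules} = List.keyfind(current, 2, 0)
Process.exit(pid2, :kill)

# Collect one :DOWN message per child as a map of id => exit reason.
reasons =
  for _ <- 1..3, into: %{} do
    receive do
      {:DOWN, ref, :process, _pid, reason} -> {Map.fetch!(refs, ref), reason}
    after
      1_000 -> raise "expected every child to be terminated"
    end
  end

IO.inspect(reasons)
```

Child 2 reports :killed because we killed it, while children 1 and 3 report :shutdown, the reason the supervisor uses when it terminates them before restarting all three.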

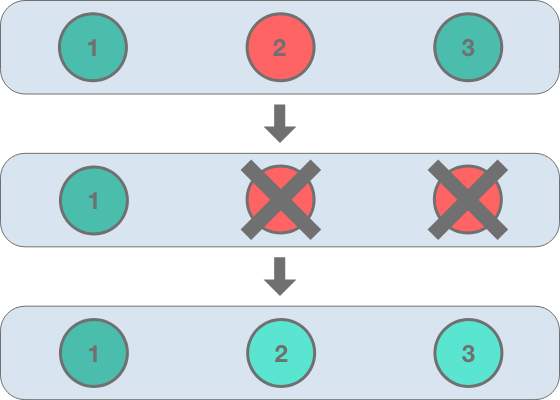

Rest for one

The previous two strategies do not consider the order in which processes are added to the supervisor. They either restart just the crashed process or all processes. rest_for_one is different. Whenever a process crashes rest_for_one will restart the crashed process and all processes that were started after it when the supervisor was initialized. The order in which processes are started matches the order of child specs passed to the supervisor's start_link function.

In our example this would mean that when process number 2 crashes processes 2 and 3 would get restarted but process 1 would stay intact:

Let's try this in iex. Notice how we are changing the strategy option to rest_for_one:

Supervisor.start_link(
  [
    Supervisor.child_spec({Boom, 1}, id: :boom_1),
    Supervisor.child_spec({Boom, 2}, id: :boom_2),
    Supervisor.child_spec({Boom, 3}, id: :boom_3)
  ],
  strategy: :rest_for_one,
  max_restarts: 10
)

This is what I saw on my machine when running the code above:

Starting process #PID<0.100.0>, number 1
Starting process #PID<0.101.0>, number 2
Starting process #PID<0.102.0>, number 3
#####
Terminating process #PID<0.102.0>, number 3
Starting process #PID<0.104.0>, number 3
#####
Terminating process #PID<0.101.0>, number 2
Starting process #PID<0.105.0>, number 2
Starting process #PID<0.106.0>, number 3
#####
Terminating process #PID<0.106.0>, number 3
Starting process #PID<0.107.0>, number 3
#####
Terminating process #PID<0.100.0>, number 1
Starting process #PID<0.108.0>, number 1
Starting process #PID<0.109.0>, number 2
Starting process #PID<0.110.0>, number 3

As expected, whenever a process crashes, it is restarted together with all the processes that follow it in the original list of child specs. A crash of the third process means that only the third process gets restarted, whereas a crash of the first process means that all children get restarted with new PIDs.
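Here is a repeatable version of this experiment, again assuming idle Agent processes as stand-ins for Boom. It kills child 2, waits for :DOWN messages from children 2 and 3, and then confirms that child 1 still has its original PID.

```elixir
# Agents stand in for Boom: three idle children with ids 1, 2 and 3.
children =
  for n <- 1..3 do
    Supervisor.child_spec({Agent, fn -> n end}, id: n)
  end

{:ok, sup} = Supervisor.start_link(children, strategy: :rest_for_one)

# Current children as a map of id => pid.
pids = fn ->
  Map.new(Supervisor.which_children(sup), fn {id, pid, _type, _modules} -> {id, pid} end)
end

before = pids.()

# Monitor every child so we can see which ones go down.
refs = for id <- 1..3, into: %{}, do: {Process.monitor(before[id]), id}

# Kill the middle child.
Process.exit(before[2], :kill)

# Only children 2 and 3 should go down, so expect exactly two :DOWN messages.
down =
  for _ <- 1..2, into: %{} do
    receive do
      {:DOWN, ref, :process, _pid, reason} -> {Map.fetch!(refs, ref), reason}
    after
      1_000 -> raise "expected children 2 and 3 to be terminated"
    end
  end

# Child 1 was started before the crashed child, so it keeps its original pid.
current = pids.()
child_1_untouched = current[1] == before[1]

IO.inspect(down, label: "down")
IO.inspect(child_1_untouched, label: "child 1 untouched")
```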

Dynamic Supervisor

One thing that the three strategies we have seen so far have in common is the fact that the list of children is somewhat static: it needs to be known at the time of initializing the supervisor. The supervisor's start_link function takes a list of child specs according to which processes will be started and supervised as long as the supervisor runs. If we want to keep adding processes after the supervisor has started, a list fixed at start-up is a poor fit. This is where DynamicSupervisor comes in.

Note that DynamicSupervisor was introduced in Elixir 1.6 and replaced a now-deprecated strategy, somewhat verbosely called simple_one_for_one, which we are not covering here.

DynamicSupervisor allows us to add processes to it at any time, not just when it is started. It uses the one_for_one strategy but is not limited to supervising the same processes all the time. We can add to its process list as we see fit.
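For comparison, a regular Supervisor can also gain children at runtime through Supervisor.start_child/2, but every child needs a unique id. This sketch, using Agent processes as assumed placeholders, shows that adding a child under an id that is already running is rejected, while a DynamicSupervisor happily starts the same child spec twice because its children have no ids.

```elixir
# A regular supervisor with one child whose id is :worker.
{:ok, sup} =
  Supervisor.start_link(
    [Supervisor.child_spec({Agent, fn -> 1 end}, id: :worker)],
    strategy: :one_for_one
  )

# Adding a child at runtime works as long as its id is new.
{:ok, _pid} =
  Supervisor.start_child(sup, Supervisor.child_spec({Agent, fn -> 2 end}, id: :worker_2))

# Reusing the id of a running child returns an error instead of a second process.
duplicate =
  Supervisor.start_child(sup, Supervisor.child_spec({Agent, fn -> 3 end}, id: :worker))

IO.inspect(duplicate)

# DynamicSupervisor children have no ids, so the same spec can be started repeatedly.
{:ok, dyn} = DynamicSupervisor.start_link(strategy: :one_for_one)
{:ok, _} = DynamicSupervisor.start_child(dyn, {Agent, fn -> 1 end})
{:ok, _} = DynamicSupervisor.start_child(dyn, {Agent, fn -> 1 end})

dynamic_children = length(DynamicSupervisor.which_children(dyn))
IO.inspect(dynamic_children, label: "dynamic children")
```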

We'll look at a quick demo in the console. DynamicSupervisor is a process itself and we are going to start it without any children. Notice that we still need to pass a strategy in even though one_for_one is the only one it supports. We are also going to give it a name so that we don't need to track its PID. We'll use the familiar max_restarts option as well. Once the supervisor is started we'll immediately ask it to count its children using the count_children/1 function.

iex(1)> DynamicSupervisor.start_link(name: :boom_supervisor, strategy: :one_for_one, max_restarts: 10)
iex(2)> DynamicSupervisor.count_children(:boom_supervisor)
%{active: 0, specs: 0, supervisors: 0, workers: 0}

The supervisor is running but it does not have any children yet. Let's give it one to supervise.

iex(3)> DynamicSupervisor.start_child(:boom_supervisor, {Boom, 1})
Starting process #PID<0.95.0>, number 1
iex(4)> DynamicSupervisor.count_children(:boom_supervisor)
%{active: 1, specs: 1, supervisors: 0, workers: 1}

Looks like it worked: the supervisor has one child now. And sure enough, we soon see it crash and get restarted:

#####
Terminating process #PID<0.95.0>, number 1
Starting process #PID<0.98.0>, number 1
#####
Terminating process #PID<0.98.0>, number 1
Starting process #PID<0.99.0>, number 1

Now, let's add a couple more children and confirm that they get restarted properly.

iex(5)> DynamicSupervisor.start_child(:boom_supervisor, {Boom, 2})
Starting process #PID<0.100.0>, number 2
iex(6)> DynamicSupervisor.start_child(:boom_supervisor, {Boom, 3})
Starting process #PID<0.102.0>, number 3
iex(7)> DynamicSupervisor.count_children(:boom_supervisor)
%{active: 3, specs: 3, supervisors: 0, workers: 3}
#####
Terminating process #PID<0.99.0>, number 1
Starting process #PID<0.104.0>, number 1
#####
Terminating process #PID<0.100.0>, number 2
Starting process #PID<0.105.0>, number 2
#####
Terminating process #PID<0.104.0>, number 1
Starting process #PID<0.106.0>, number 1
#####
Terminating process #PID<0.105.0>, number 2
Starting process #PID<0.107.0>, number 2
#####
Terminating process #PID<0.102.0>, number 3
Starting process #PID<0.108.0>, number 3

We can see that the new processes have been correctly started and get supervised using the one_for_one strategy. Excellent!
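To check the dynamic supervisor's behaviour without waiting for random crashes, this sketch starts three Agent processes (assumed stand-ins for Boom) at runtime, kills one of them, polls DynamicSupervisor.which_children/1 until a replacement shows up, and then removes a child for good with DynamicSupervisor.terminate_child/2.

```elixir
{:ok, sup} = DynamicSupervisor.start_link(strategy: :one_for_one)

# Start three children at runtime; no ids are needed.
[first, second, _third] =
  for n <- 1..3 do
    {:ok, pid} = DynamicSupervisor.start_child(sup, {Agent, fn -> n end})
    pid
  end

# Pids of the children the supervisor currently knows about.
child_pids = fn ->
  for {_id, pid, _type, _modules} <- DynamicSupervisor.which_children(sup), do: pid
end

# Kill the first child; the supervisor should replace it with a new process.
Process.exit(first, :kill)

# Restarts are asynchronous, so poll until the killed pid is gone and 3 children remain.
replaced =
  Enum.any?(1..100, fn _ ->
    current = child_pids.()
    done = length(current) == 3 and first not in current
    unless done, do: Process.sleep(10)
    done
  end)

# terminate_child/2 stops a child without restarting it.
:ok = DynamicSupervisor.terminate_child(sup, second)

children_left = length(child_pids.())
IO.inspect(replaced, label: "replaced")
IO.inspect(children_left, label: "children left")
```

A crash keeps the child count at three because the child is restarted, whereas terminate_child/2 shrinks it to two: that is the difference between a failure and a deliberate removal.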

Summary

This concludes our brief tour of supervision strategies available in Elixir. We have seen how the one_for_one, one_for_all and rest_for_one strategies can be used to control the way processes get restarted in case of a crash. We have also touched upon the fact that standard supervisors take a static list of processes and that we have DynamicSupervisor at our disposal if we need to add children more flexibly. We also got our hands dirty and saw processes crashing and restarting right in front of us in iex sessions.

Elixir processes and the various ways in which they can be supervised are one of those aspects of the language that I find the most fascinating. They allow us to organise our code and think about our domain problems in a radically different way. The supervision strategies at our disposal help us make our systems resilient and self-healing and, ultimately, make our lives easier as well.